Motion Background Modeling

Motion estimation: background modeling with a Gaussian mixture background

Basic cases of relative motion

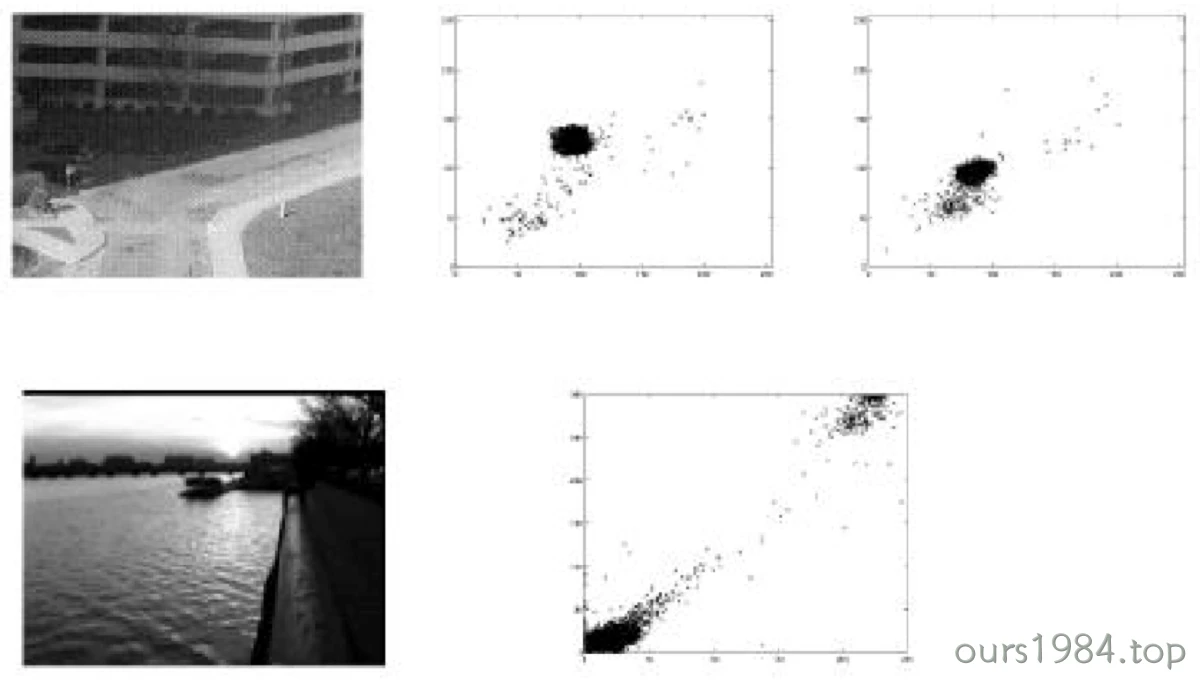

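- Camera static, target moving -- background extraction (subtraction)

- Camera moving, target static -- optical flow estimation (global motion)

- Camera and target both moving -- optical flow estimation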

Gaussian mixture background

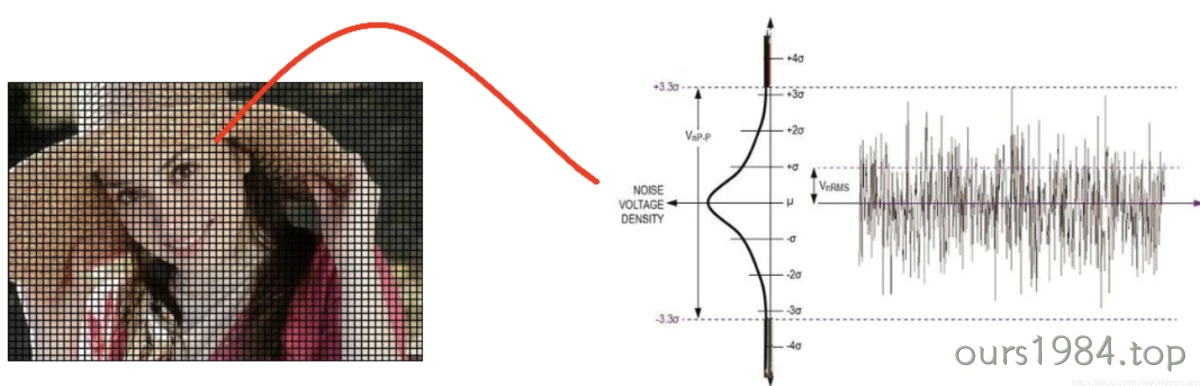

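Empirically, a background that the human eye perceives as unchanging still has gray values that vary over time in the camera images, and for each pixel these values follow a Gaussian distribution.

More generally, any continuous probability distribution can be approximated to arbitrary accuracy by a weighted combination of enough Gaussian distributions.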

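Gaussian mixture model

Each pixel of the background image is modeled independently by a Gaussian mixture model made up of Q Gaussian distributions, where:

\(I\) is the input pixel value, and \(N_q\) is the density of the \(q\)-th Gaussian distribution in the mixture;

\(w_q\) is the weight of the \(q\)-th Gaussian distribution in the mixture;

\(\mu_q\) and \(\sigma_q^2\) are the mean and variance of the \(q\)-th Gaussian distribution in the mixture.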

\[ \begin{aligned} P(I)=&\sum_{q=1}^Qw_qN_q(I;\mu_q,\sigma_q^2);\\ N_q(I;\mu_q,\sigma_q^2)=&\frac1{\sqrt{2\pi}\sigma_q}e^{-\frac{(I-\mu_q)^2}{2\sigma_q^2}} \end{aligned} \]
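The mixture density above can be evaluated for a single pixel as in the minimal pure-Python sketch below. The function names and the three-component parameter values are hypothetical and chosen only for illustration. Variances are passed as \(\sigma_q^2\).

```python
import math


def gaussian_pdf(x: float, mu: float, var: float) -> float:
    """N_q(I; mu_q, sigma_q^2) for a scalar gray value x (var = sigma_q^2)."""
    return math.exp(-(x - mu) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def mixture_pdf(x: float, weights, means, variances) -> float:
    """P(I) = sum_q w_q * N_q(I; mu_q, sigma_q^2)."""
    return sum(w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances))


# Hypothetical 3-component model of one pixel (illustrative values only).
weights = [0.6, 0.3, 0.1]
means = [120.0, 200.0, 40.0]
variances = [25.0, 100.0, 400.0]
print(mixture_pdf(120.0, weights, means, variances))
```

The weights sum to 1, so `mixture_pdf` is itself a valid probability density over gray values.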

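- The modeling task is to estimate, for every pixel in every frame, the weight, mean and variance of each Gaussian distribution. These estimates determine whether the pixel is background, which in turn separates out the foreground.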

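Background modeling procedure

Initialize the model

Choose the number of Gaussian distributions Q (typically 3~7) and the learning rate \(\alpha\) (typically 0.01~0.1).

Read the first frame and initialize the weights and the parameters of every Gaussian distribution: \[ w_q(1)=\frac{1}{Q},\quad \mu_q(1)=I(1),\quad \sigma_q(1)=10\ \text{(a relatively large value)} \]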
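The initialization step is shown below as a minimal sketch for one pixel. The helper name `init_pixel_model` and the default `Q=5` are assumptions, with `Q=5` picked from the 3~7 range above. Variances are stored as \(\sigma_q^2\), so \(\sigma_q(1)=10\) becomes 100.

```python
def init_pixel_model(first_value: float, Q: int = 5, sigma0: float = 10.0):
    """Initialize Q Gaussians for one pixel from the first frame I(1).

    w_q(1) = 1/Q, mu_q(1) = I(1), sigma_q(1) = sigma0 (a large value).
    Variances are stored as sigma^2.
    """
    weights = [1.0 / Q] * Q
    means = [float(first_value)] * Q
    variances = [sigma0 ** 2] * Q
    return weights, means, variances


w, mu, var = init_pixel_model(100.0, Q=4)
print(w, mu, var)
```

Every component starts out identical. The components separate over later frames as different gray values are matched to them.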

Match the Gaussian distributions

Read frame \(k\); the pixel value is \(I(k)\).

The pixel matches the \(q\)-th Gaussian distribution if \[ |I(k)-\mu_q(k-1)|<2.5\sigma_q(k-1) \]
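The matching test below is a one-line sketch. The name `is_match` is hypothetical, and `var` holds \(\sigma_q^2(k-1)\).

```python
import math


def is_match(x: float, mu: float, var: float, k: float = 2.5) -> bool:
    """True if |I(k) - mu_q(k-1)| < k * sigma_q(k-1); var is sigma_q^2(k-1)."""
    return abs(x - mu) < k * math.sqrt(var)


print(is_match(124.0, 100.0, 100.0))  # within 2.5 * 10 = 25 gray levels
print(is_match(130.0, 100.0, 100.0))
```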

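If several distributions match, pick the best one, \(l\). If none matches, \(l\) is the lowest-weight distribution, which is then replaced: \[ l=\begin{cases} \arg\min\limits_q\left[\frac{|I(k)-\mu_q(k-1)|}{\sigma_q(k-1)}\right], & \text{if some } q \text{ satisfies the condition above} \\ \arg\min\limits_q w_q(k-1), & \text{if no } q \text{ satisfies it} \end{cases} \]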
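The selection rule can be sketched as below. The function `select_component` is a hypothetical helper. It returns \(l\) together with a flag that says whether a real match was found. The check \(|I-\mu_q|<2.5\sigma_q\) is rewritten as a normalized distance below 2.5, which is equivalent for \(\sigma_q>0\).

```python
import math


def select_component(x, weights, means, variances, k=2.5):
    """Return (l, matched): best matching component, or lowest-weight one if none match."""
    # Normalized distance |I(k) - mu_q(k-1)| / sigma_q(k-1) for every component.
    dists = [abs(x - m) / math.sqrt(v) for m, v in zip(means, variances)]
    matched = [q for q, d in enumerate(dists) if d < k]
    if matched:
        return min(matched, key=lambda q: dists[q]), True
    # No match: pick argmin_q w_q, the component to be replaced.
    return min(range(len(weights)), key=lambda q: weights[q]), False


print(select_component(170.0, [0.5, 0.3, 0.2], [100.0, 150.0, 200.0], [100.0] * 3))
```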

Update the Gaussian parameters

If a best match exists: \[ \begin{aligned} w_q(k)=&\begin{cases} (1-\alpha)w_q(k-1),&q\neq l\\ w_q(k-1),&q=l \end{cases}\\ \mu_q(k)=&\begin{cases} \mu_q(k-1),&q\neq l\\ (1-\rho)\mu_q(k-1)+\rho I(k),&q=l \end{cases}\\ \sigma_q^2(k)=&\begin{cases} \sigma_q^2(k-1),&q\neq l\\ (1-\rho)\sigma_q^2(k-1)+\rho[I(k)-\mu_q(k)]^2,&q=l \end{cases}\\ \rho=&\alpha N_l(I(k);\mu_l(k-1),\sigma_l^2(k-1)) \end{aligned} \]
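The matched-case update is sketched below. The name `update_matched` and the default `alpha=0.05` are assumptions. The lists are modified in place, and the weights are renormalized at the end so that they sum to 1. The learning factor \(\rho\) is computed from the old \(\mu_l\) and \(\sigma_l^2\), and the variance update uses the already-updated mean \(\mu_l(k)\), as in the formulas.

```python
import math


def gaussian_pdf(x, mu, var):
    return math.exp(-(x - mu) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def update_matched(x, l, weights, means, variances, alpha=0.05):
    """In-place update when component l is the best match for I(k) = x."""
    rho = alpha * gaussian_pdf(x, means[l], variances[l])  # uses mu_l(k-1), sigma_l^2(k-1)
    for q in range(len(weights)):
        if q != l:
            weights[q] *= (1.0 - alpha)  # unmatched components decay; w_l is kept
    means[l] = (1.0 - rho) * means[l] + rho * x
    variances[l] = (1.0 - rho) * variances[l] + rho * (x - means[l]) ** 2  # uses mu_l(k)
    total = sum(weights)  # renormalize so the weights sum to 1
    for q in range(len(weights)):
        weights[q] /= total


w2, m2, v2 = [0.5, 0.5], [100.0, 200.0], [100.0, 100.0]
update_matched(100.0, 0, w2, m2, v2, alpha=0.1)
print(w2, m2, v2)
```

After renormalization, the matched component's weight grows relative to the others, even though its own formula leaves it unchanged.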

If no distribution matches: \[ \begin{aligned} w_q(k)=&\begin{cases} w_q(k-1),&q\neq l\\ 0.5\min\limits_j\{w_j(k-1)\},&q=l \end{cases}\\ \mu_q(k)=&\begin{cases} \mu_q(k-1),&q\neq l\\ I(k),&q=l \end{cases}\\ \sigma_q^2(k)=&\begin{cases} \sigma_q^2(k-1),&q\neq l\\ 2\max\limits_j\{\sigma_j^2(k-1)\},&q=l \end{cases} \end{aligned} \]
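The no-match case is sketched below. The helper name `update_unmatched` is hypothetical. It picks \(l=\arg\min_q w_q\) itself and replaces that component with a new Gaussian centered at \(I(k)\), using a small weight and a large variance. It then renormalizes the weights and returns \(l\). The minimum weight and maximum variance are both read before the replacement.

```python
def update_unmatched(x, weights, means, variances):
    """In-place replacement of the lowest-weight component when nothing matches I(k) = x."""
    l = min(range(len(weights)), key=lambda q: weights[q])  # argmin_q w_q(k-1)
    new_w = 0.5 * min(weights)        # 0.5 * min_j w_j(k-1)
    new_var = 2.0 * max(variances)    # 2 * max_j sigma_j^2(k-1)
    weights[l], means[l], variances[l] = new_w, float(x), new_var
    total = sum(weights)  # renormalize so the weights sum to 1
    for q in range(len(weights)):
        weights[q] /= total
    return l


wu, mu_u, vu = [0.5, 0.3, 0.2], [100.0, 150.0, 200.0], [100.0, 100.0, 400.0]
print(update_unmatched(0.0, wu, mu_u, vu), wu, mu_u, vu)
```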

Note: whenever any weight \(w_q\) changes, all weights must be renormalized so that they sum to 1.

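Determine the background model

Sort the Q Gaussian distributions by \(\frac{w_q}{\sigma_q}\) in descending order. This places distributions with a large weight (observed often) and a small variance (stable) first.

Take the first B distributions as the background model of the pixel, where \[ B=\arg\min_b\left(\sum_{q=1}^{b}w_q>T\right) \] that is, B is the smallest b whose cumulative weight exceeds T.

\(T\) is a preset threshold (0.5~1).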
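The sort-and-select step is sketched below. The name `background_components` is hypothetical, and `T=0.7` is an assumed default from the 0.5~1 range. The function returns the indices of the first B components in sorted order.

```python
import math


def background_components(weights, variances, T=0.7):
    """Indices of the first B components after sorting by w_q / sigma_q (descending)."""
    order = sorted(range(len(weights)),
                   key=lambda q: weights[q] / math.sqrt(variances[q]),
                   reverse=True)
    selected, cumulative = [], 0.0
    for q in order:
        selected.append(q)
        cumulative += weights[q]
        if cumulative > T:  # B = smallest b with cumulative weight > T
            break
    return selected


print(background_components([0.2, 0.5, 0.3], [100.0, 25.0, 400.0], T=0.6))
```

If \(T\) is set so high that the cumulative weight never exceeds it, this sketch simply returns all Q components.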

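Classify the foreground

If the current pixel falls within one of the first B Gaussian distributions, it is background; otherwise it is foreground.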
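The complete procedure for a single pixel (initialize, match, update, determine the background model, classify) is combined below into one minimal pure-Python sketch. The class name `PixelGMM` and its defaults (Q=5, \(\alpha\)=0.05, \(\sigma\)=10, T=0.7) are assumptions picked from the ranges in the text. A pixel is treated as background when its component \(l\) is among the first B components after the update.

```python
import math


class PixelGMM:
    """Gaussian mixture background model for ONE pixel, following the steps above.

    Assumed defaults: Q=5, alpha=0.05, sigma0=10, T=0.7. Variances are stored as sigma^2.
    """

    def __init__(self, first_value, Q=5, alpha=0.05, sigma0=10.0, T=0.7):
        self.alpha, self.T = alpha, T
        self.w = [1.0 / Q] * Q                  # w_q(1) = 1/Q
        self.mu = [float(first_value)] * Q      # mu_q(1) = I(1)
        self.var = [sigma0 ** 2] * Q            # sigma_q(1) = sigma0

    def _pdf(self, x, q):
        v = self.var[q]
        return math.exp(-(x - self.mu[q]) ** 2 / (2.0 * v)) / math.sqrt(2.0 * math.pi * v)

    def _normalize(self):
        s = sum(self.w)
        self.w = [wq / s for wq in self.w]

    def _background_set(self):
        # Sort by w/sigma (descending); keep the first B with cumulative weight > T.
        order = sorted(range(len(self.w)),
                       key=lambda q: self.w[q] / math.sqrt(self.var[q]), reverse=True)
        chosen, cum = [], 0.0
        for q in order:
            chosen.append(q)
            cum += self.w[q]
            if cum > self.T:
                break
        return chosen

    def apply(self, x):
        """Process I(k) = x for a frame k >= 2; return True if x is foreground."""
        x = float(x)
        Q = len(self.w)
        d = [abs(x - self.mu[q]) / math.sqrt(self.var[q]) for q in range(Q)]
        matched = [q for q in range(Q) if d[q] < 2.5]
        if matched:
            l = min(matched, key=lambda q: d[q])         # best match
            rho = self.alpha * self._pdf(x, l)           # uses mu_l(k-1), sigma_l^2(k-1)
            self.w = [wq if q == l else (1.0 - self.alpha) * wq
                      for q, wq in enumerate(self.w)]
            self.mu[l] = (1.0 - rho) * self.mu[l] + rho * x
            self.var[l] = (1.0 - rho) * self.var[l] + rho * (x - self.mu[l]) ** 2
        else:
            l = min(range(Q), key=lambda q: self.w[q])   # weakest component
            new_w, new_var = 0.5 * min(self.w), 2.0 * max(self.var)
            self.w[l], self.mu[l], self.var[l] = new_w, x, new_var
        self._normalize()
        # Background iff the pixel's component l is among the first B components.
        return l not in self._background_set()


model = PixelGMM(100.0, Q=3)
print([model.apply(v) for v in [250.0, 100.0]])  # [True, False]
```

The sketch handles one pixel only. A full frame needs one such model per pixel, and practical implementations vectorize this loop instead of calling it pixel by pixel.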

OpenCV functions

- `cv::BackgroundSubtractor`: base class for background/foreground segmentation.

- `cv::createBackgroundSubtractorMOG2`: creates a MOG2 background subtractor.